We asked:

What would be the consequences if Martin Shkreli's AI chatbot made a medical mistake that resulted in harm to a patient?

The Gist:

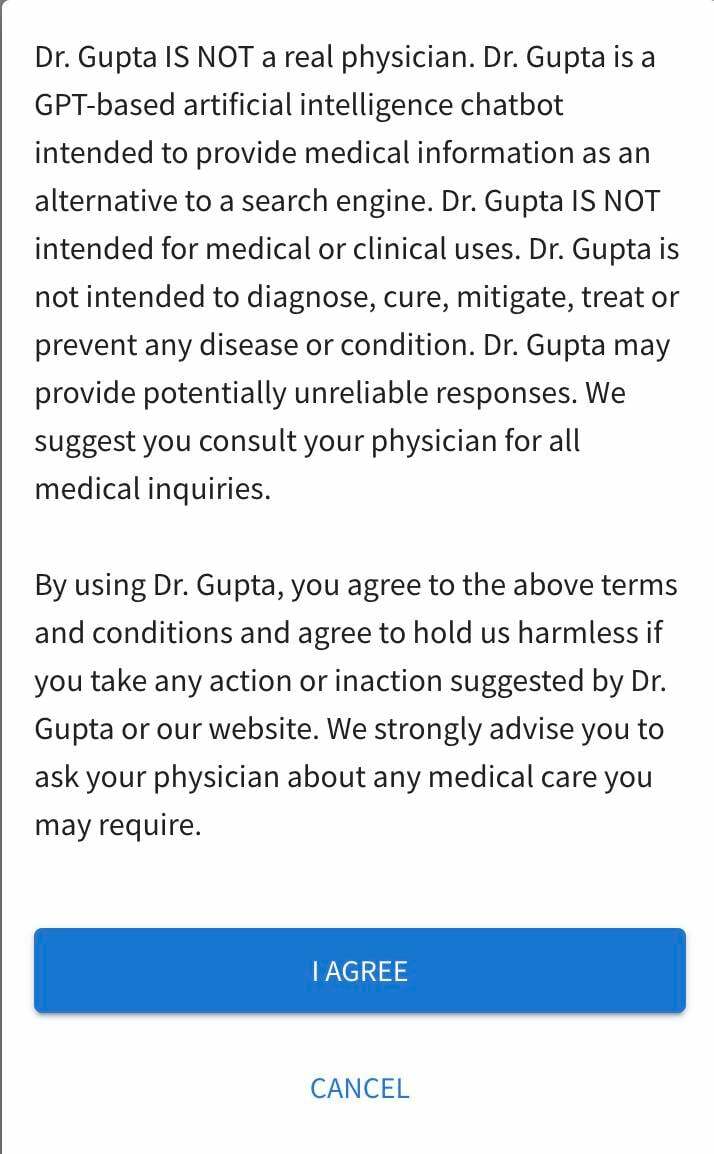

Martin Shkreli's new AI chatbot, Dr. Gupta, has caused a stir in the medical and legal communities. The chatbot offers medical advice to users without any oversight from a qualified physician, raising serious concerns about the accuracy of that advice. Users who act on inaccurate advice could face serious medical complications.

Decoded:

The potential for artificial intelligence (AI) to disrupt the healthcare industry is vast and exciting, but the recent news that Martin Shkreli, the ex-CEO notorious for drug price gouging, has launched an AI chatbot indicates that caution is still in order.

The AI chatbot, called “Dr. Gupta” by its creator, works by automatically verifying insurance coverage and providing referrals to low-cost prescriptions at approved pharmacies. It supposedly limits your search pool to approved medicines which have been deemed safe by regulators. But medical and legal experts have serious questions about the accuracy of the software's diagnosis and treatment advice.

First, they argue that the AI chatbot is a troubling example of healthcare decisions being made based on limited information. Considering that most AI chatbots are programmed by individuals whose medical expertise and experience are often limited or non-existent, medical decisions would lack the Human Hypothesis-Driven Diagnosis (HHDD) approach and the understanding that come with a proper doctor-patient relationship.

Additionally, due to the lack of human oversight and expertise, there is potential for serious legal and ethical implications. Consumers may unknowingly receive medications that could be dangerous; physicians identified as using faulty AI programs could see their reputations put in jeopardy; and administrative oversight may be, or appear to be, non-existent.

Martin Shkreli has a boatload of enemies, but other AI companies are actively pursuing similar projects. AI diagnosis has worrying implications, and they are not limited to healthcare: financial institutions, law firms, and insurance companies could also adopt automated advice.

The advantages of AI chatbots in healthcare and other industries cannot be denied, and the tools are already omnipresent. It is essential, however, that health care providers, policymakers, and consumers approach automated insights with the same caution and critical eye they would apply to advice from any other source. Ethical safeguards must be in place to address potential harm caused by faulty diagnosis, misinterpretation (or lack of interpretation) of medical data, and inadequate support for consumers.

AI chatbots may be the future of medical and other advice, but they should remain a tool of last resort until their processes and software accuracy have been assured and the providers who rely on them are subject to oversight and accountability.

Essential Insights:

Three-Word Highlights

Medical, Artificial Intelligence, Legal

Winners & Losers:

Pros

1. Martin Shkreli's AI chatbot provides a convenient and accessible way for people to get medical advice without having to wait in line or make an appointment.

2. The chatbot could potentially provide more accurate medical advice than a human doctor, as it is programmed to respond to specific questions and conditions.

3. The chatbot could help reduce the cost of healthcare, as it eliminates the need for expensive office visits and consultations.

Cons

1. The chatbot does not have the same level of expertise as a trained doctor, and could potentially provide inaccurate or dangerous advice.

2. The chatbot does not take into account a patient's individual medical history or other factors that could affect treatment, which could lead to an incorrect diagnosis or treatment.

3. The chatbot could be used to exploit vulnerable patients, as it does not have the same ethical standards as a human doctor.

Bottom Line:

Martin Shkreli's AI chatbot, Dr. Gupta, is a dangerous combination of medical and legal risks that could lead to serious harm for unsuspecting users.
